Recommended talks from KubeCon EU 2021

Two weeks ago I (virtually) attended KubeCon + CloudNativeCon EU 2021. There was a plethora of talks, and I want to share a selection of the ones I think are worth your time. Of course, I have not watched all of them, so there is a good chance I missed an interesting one. If so, please let me know!

In addition to the talks themselves, I also enjoyed the “Office Hours” of awesome projects in the cloud-native space, such as Crossplane and KEDA.

Click on the screenshots below to get to the videos.

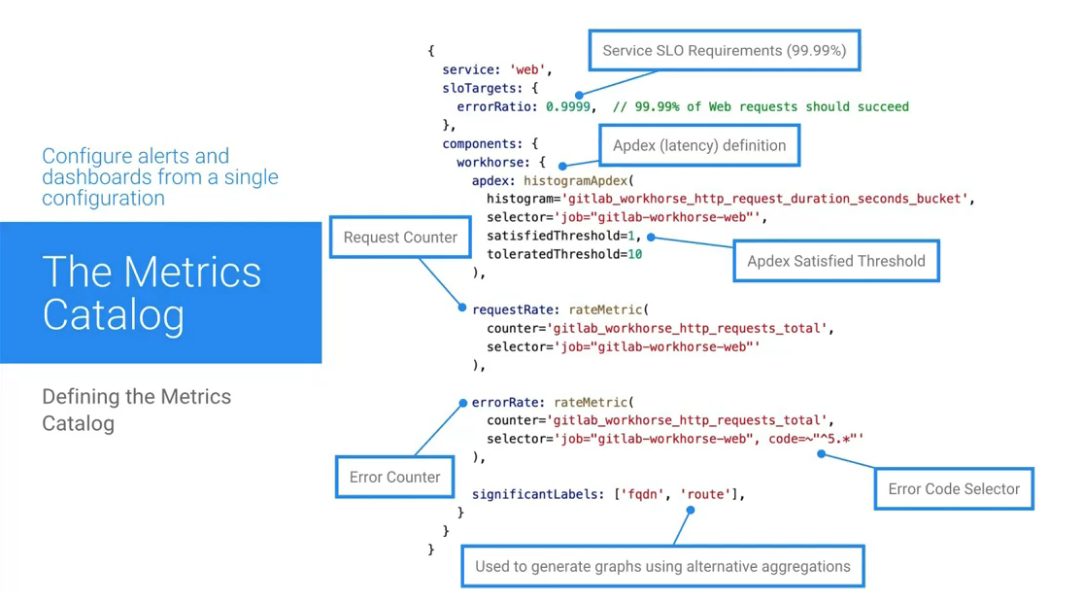

# Effective infrastructure monitoring of an entire organization

Prometheus, Grafana & Co. are really nice tools, but their configuration needs to be maintained and integrated well. For this purpose, GitLab developed the “Metrics Catalog”: a single source of truth that manages metrics, SLAs, alerting rules and dashboards.

This was my favorite talk of the entire conference. The level of integration between development, operations and on-call is incredible - actual DevOps!

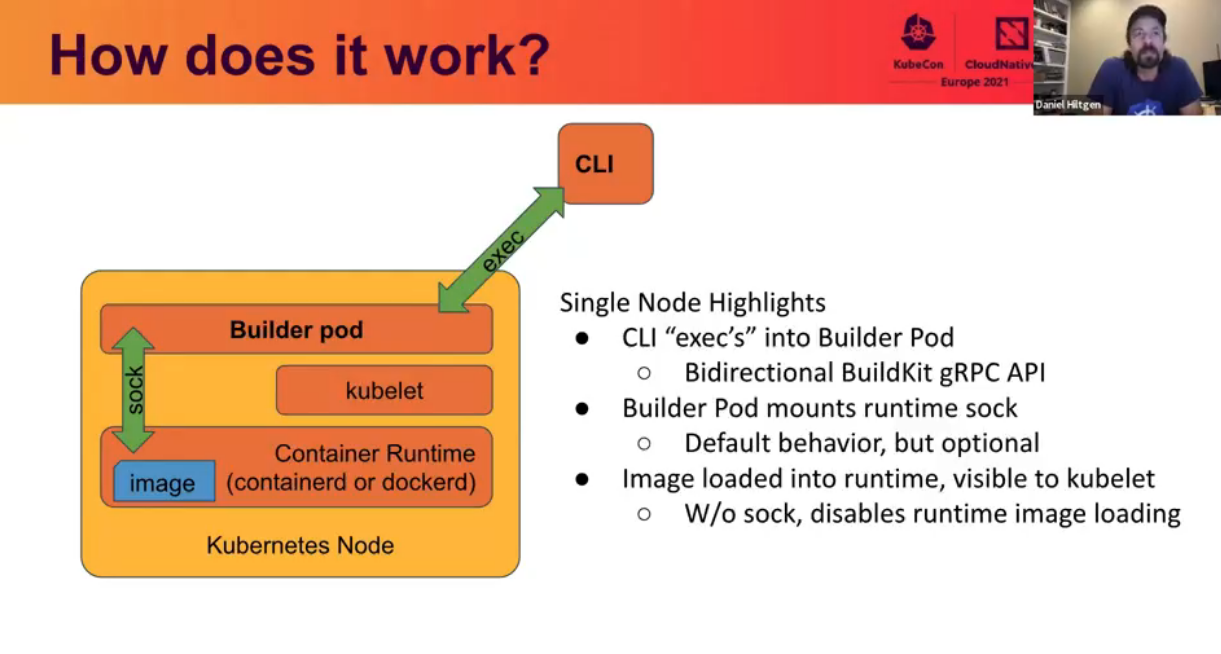

# kubectl build

Docker is an excellent development tool, but it no longer fits well into a modern Kubernetes workflow.

When developing an application locally, you first need to build the image on your machine, then push it to a registry that the remote cluster can reach, and only then can you use it in Kubernetes.

With kubectl build this process becomes one simple step.

It offers the same UX as Docker, but works directly with BuildKit and containerd.

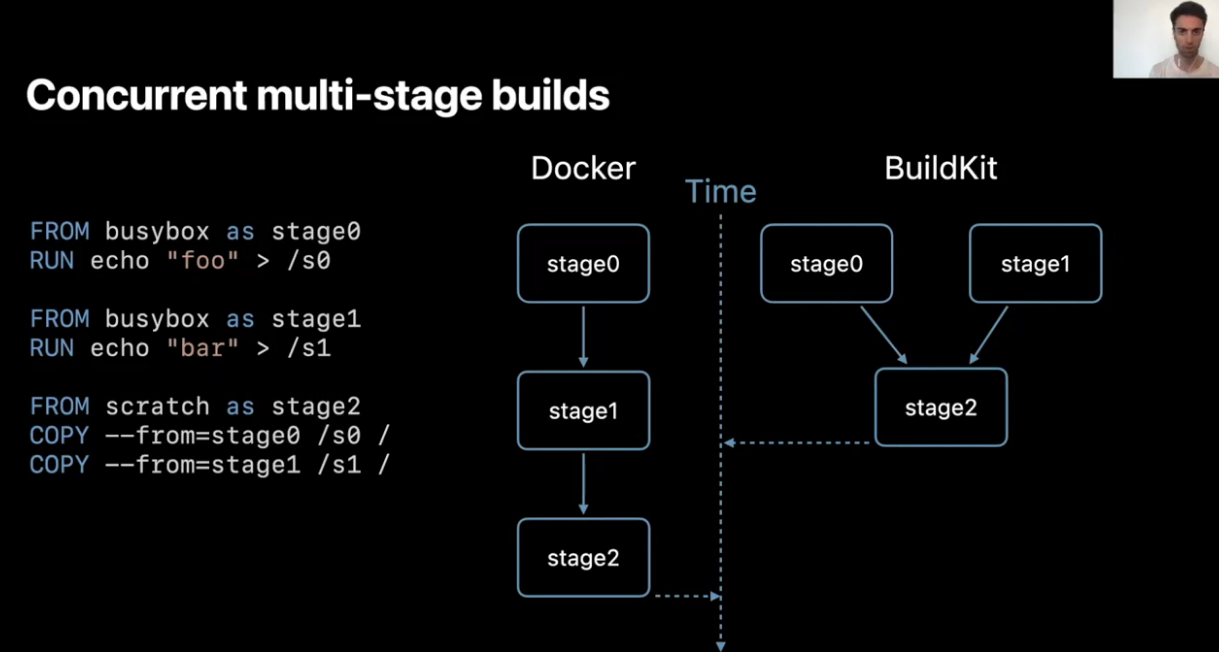

# BuildKit Deep-dive

Gautier Delorme outlined the features of BuildKit (better caching for image builds, build-time secrets, multiple output formats) and how Apple is leveraging these in their “BuildKit farm” running on Kubernetes, which builds images for CI and production.

# Kubernetes Selectors

We are surely all familiar with Kubernetes selectors, but this presentation by Christopher Hanson gave some great examples of more advanced use-cases for slicing and dicing your pods and services.
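
To make the idea of "slicing and dicing" a bit more concrete, here is a minimal Python sketch of how set-based label selectors behave. It is not taken from the talk and it is not how Kubernetes implements selectors; the `Requirement` class, the `select` helper and the pod names are made up for illustration. It only mirrors the documented semantics of the `In`, `NotIn`, `Exists` and `DoesNotExist` operators.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Requirement:
    """One selector requirement (hypothetical helper type)."""
    key: str
    operator: str  # "In", "NotIn", "Exists" or "DoesNotExist"
    values: Tuple[str, ...] = ()


def matches(labels: Dict[str, str], requirements: List[Requirement]) -> bool:
    """Return True if the labels satisfy ALL requirements (logical AND)."""
    for req in requirements:
        present = req.key in labels
        if req.operator == "In":
            ok = present and labels[req.key] in req.values
        elif req.operator == "NotIn":
            # Objects without the key also match NotIn.
            ok = not present or labels[req.key] not in req.values
        elif req.operator == "Exists":
            ok = present
        elif req.operator == "DoesNotExist":
            ok = not present
        else:
            raise ValueError(f"unknown operator: {req.operator}")
        if not ok:
            return False
    return True


def select(pods: Dict[str, Dict[str, str]], requirements: List[Requirement]) -> List[str]:
    """Return the sorted names of all pods whose labels match."""
    return sorted(name for name, labels in pods.items() if matches(labels, requirements))


# Made-up pods and labels, purely for illustration.
PODS = {
    "web-1": {"app": "web", "tier": "frontend", "env": "prod"},
    "web-2": {"app": "web", "tier": "frontend", "env": "staging"},
    "db-1": {"app": "db", "tier": "backend", "env": "prod"},
    "job-1": {"app": "batch"},
}

if __name__ == "__main__":
    # Roughly what `env in (prod, staging), tier notin (backend)` expresses.
    print(select(PODS, [
        Requirement("env", "In", ("prod", "staging")),
        Requirement("tier", "NotIn", ("backend",)),
    ]))
```

Note that `job-1` is excluded by the `In` requirement because it has no `env` label at all, while a pod without a `tier` label would still pass the `NotIn` requirement.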

# Cluster Autoscaler Deep-dive

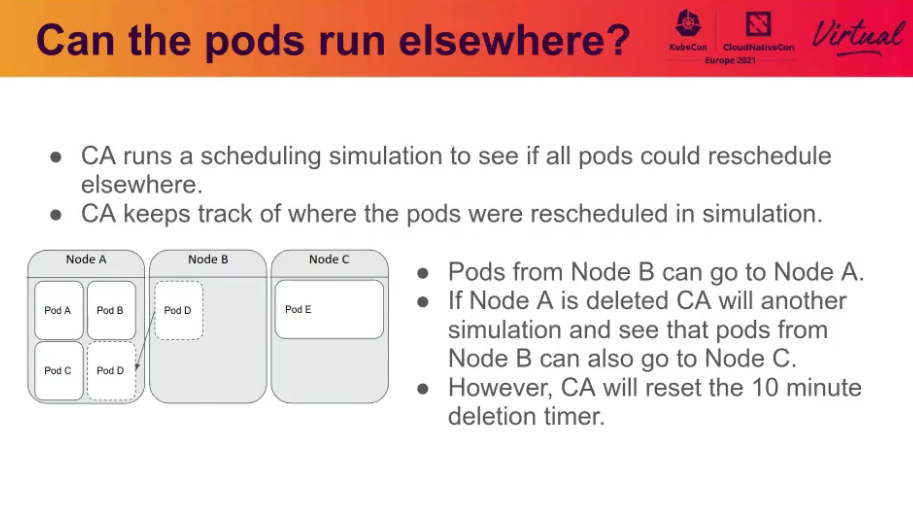

The Cluster Autoscaler dynamically adds and removes nodes from a Kubernetes cluster based on the utilization of the nodes. Figuring out when to add a new node is easy. Knowing when to remove a node - and which one - is more difficult. And that is exactly the topic of this deep-dive session.
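
To illustrate why scale-down is the harder half, here is a heavily simplified Python sketch of the question "which nodes could be removed?". It is my own toy model, not the Cluster Autoscaler's actual algorithm: it only looks at CPU requests, the `Node` class and all numbers are invented, and the 0.5 utilization threshold is an assumption for this example.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """Toy node model: only CPU requests are considered (an assumption)."""
    name: str
    cpu_capacity: float
    pod_cpu_requests: List[float] = field(default_factory=list)

    @property
    def requested(self) -> float:
        return sum(self.pod_cpu_requests)

    @property
    def utilization(self) -> float:
        return self.requested / self.cpu_capacity


def fits_elsewhere(node: Node, others: List[Node]) -> bool:
    """Check whether all pods of `node` fit on the other nodes.

    Uses a simple first-fit placement, largest pods first.
    """
    free = {o.name: o.cpu_capacity - o.requested for o in others}
    for request in sorted(node.pod_cpu_requests, reverse=True):
        target = next((n for n, f in free.items() if f >= request), None)
        if target is None:
            return False
        free[target] -= request
    return True


def removable_nodes(nodes: List[Node], threshold: float = 0.5) -> List[str]:
    """Return names of nodes that are underutilized AND whose pods fit elsewhere.

    Each node is evaluated on its own; removing several candidates at
    once may not be possible, so a real scale-down has to re-check.
    """
    result = []
    for node in nodes:
        others = [n for n in nodes if n is not node]
        if node.utilization < threshold and fits_elsewhere(node, others):
            result.append(node.name)
    return result


# Invented cluster state, purely for illustration.
NODES = [
    Node("a", 4.0, [1.0, 1.0, 1.0]),  # 75% requested: too busy to remove
    Node("b", 4.0, [0.5, 0.5]),       # 25% requested
    Node("c", 4.0, [1.5]),            # 37.5% requested
]

if __name__ == "__main__":
    print(removable_nodes(NODES))
```

Even this toy version shows the two separate questions: is the node underutilized, and can its pods be rescheduled somewhere else? The real autoscaler has to consider much more than CPU, which is where the talk comes in.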

# Gateway API

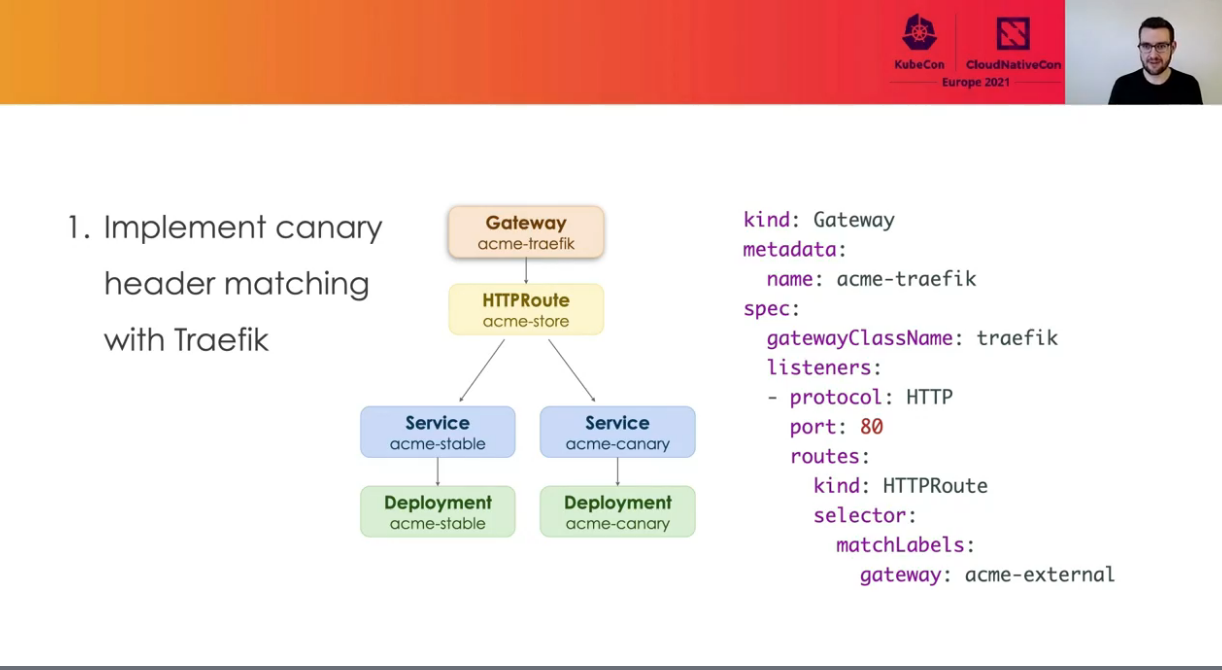

The Ingress API is one of the oldest APIs in Kubernetes. It is rather simple and not very flexible. Lots of workarounds have been developed to enable more advanced use-cases (mostly via annotations), but they are always implementation-specific (Nginx, Traefik etc.). The new Gateway API standardizes many of these features into an API for Services and Ingresses - and it can even load-balance across multiple clusters!

# Kubernetes Sidecars in the Wild

Sidecars have become a rather trendy pattern over the last couple of years. However, the sidecar primitives in Kubernetes are pretty basic: they don’t support startup / shutdown ordering and are not usable with jobs or init containers. Netflix has run up against these limitations and is looking for ways to extend and enhance sidecar support in Kubernetes.

# Hacking Kubernetes Security

I enjoyed this presentation because it took advantage of the fact that all talks were pre-recorded and tried something completely different. While the content is not very technical, there is nevertheless a lesson to be learned here.

# Smarter container runtimes

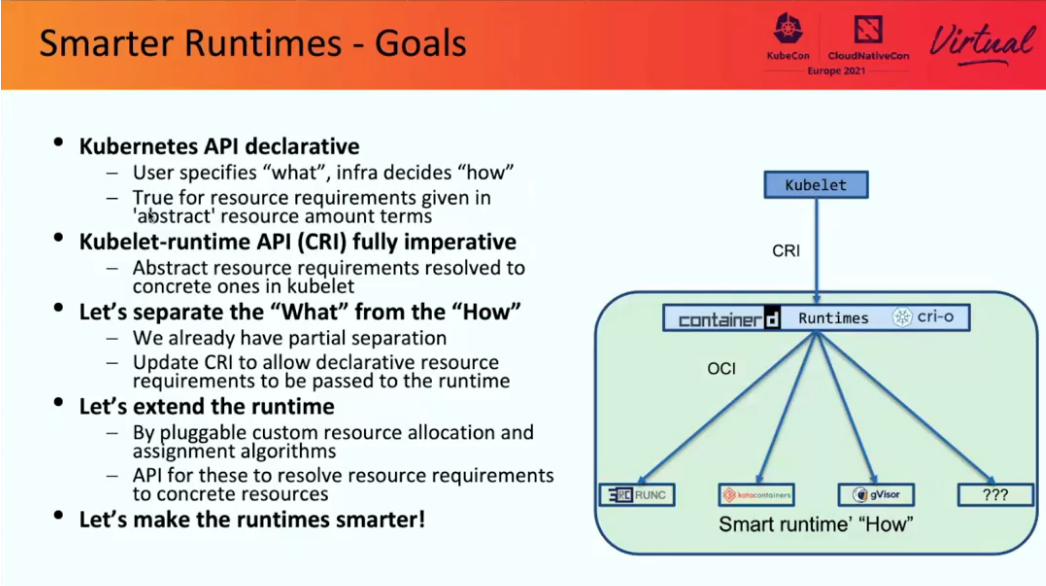

Kubernetes treats its underlying resources as completely homogeneous: one core is one vCPU and every MB of memory is exactly the same. Reality looks a bit different, however: different machines have different I/O interfaces, and memory should be close to the CPU that uses it (NUMA). Krisztian Litkey and Alexander Kanevskiy presented some interesting proposals for squeezing the last bit of performance out of your clusters.

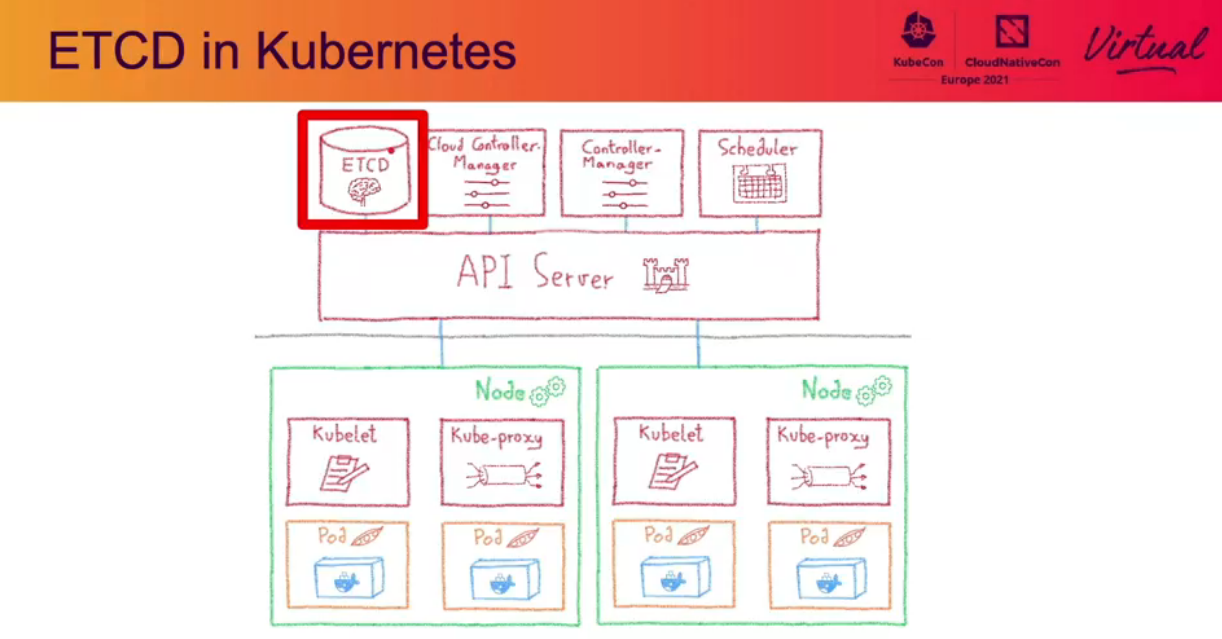

# Operating etcd at scale

etcd is a critical component in Kubernetes clusters, as it holds all the state. In very large Kubernetes clusters, it can become a bottleneck. I personally don’t have these kinds of problems in my clusters, but I find it interesting to know nonetheless. Also, the performance monitoring and optimizations presented for etcd are not specific to Kubernetes.

Happy learning!